Alibaba researchers introduce ZeroSearch, a reinforcement learning method that trains LLMs to use search without calling real search APIs during training. It replaces live search with an LLM-based document simulator and trains QA models to reason over multiple steps. A 14B model trained this way outperforms Google Search-powered baselines on standard benchmarks.

Training without external tools

ZeroSearch removes the need for search APIs or browser-based tools during model training.

- Trains policy models using simulated documents instead of real search results

- Avoids API costs and latency by removing search engine dependencies

- Simulation uses prompted LLMs or fine-tuned 3B, 7B, or 14B models

- Policy learns to reason through multi-step QA from simulated retrieval alone, with no live search calls
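The interaction described above can be pictured as a loop in which the policy alternates between issuing queries and reading simulated documents until it commits to an answer. The sketch below is a minimal illustration, not the authors' code: the `<search>`, `<information>`, and `<answer>` tags, the `rollout` function, and the toy policy and simulator are all assumptions made for this example.

```python
import re
from typing import Callable, List

# Hypothetical tag format; the real prompt template may differ.
SEARCH_RE = re.compile(r"<search>(.*?)</search>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def rollout(question: str,
            policy: Callable[[str], str],
            simulator: Callable[[str], List[str]],
            max_turns: int = 4) -> str:
    """Run one multi-step episode and return the final answer ('' if none)."""
    context = f"Question: {question}\n"
    for _ in range(max_turns):
        step = policy(context)          # policy continues from the current context
        context += step + "\n"
        answer = ANSWER_RE.search(step)
        if answer:                      # the policy committed to an answer
            return answer.group(1).strip()
        query = SEARCH_RE.search(step)
        if query:                       # no live API call: documents are simulated
            docs = simulator(query.group(1).strip())
            context += "<information>\n" + "\n".join(docs) + "\n</information>\n"
    return ""                           # ran out of turns without answering


# Toy stand-ins so the loop can be run without any model.
def toy_policy(context: str) -> str:
    if "<information>" not in context:
        return "<search>capital of France</search>"
    return "<answer>Paris</answer>"


def toy_simulator(query: str) -> List[str]:
    return [f"Simulated document for '{query}': Paris is the capital of France."]


final_answer = rollout("What is the capital of France?", toy_policy, toy_simulator)
```

In a real setup, `policy` and `simulator` would each wrap a language model call; the loop itself never touches a network search service.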

Simulation engine setup

The simulation engine generates noisy retrieval documents from intermediate queries.

- Generates up to 20 documents per query using language models

- Varies document quality to simulate realistic and imperfect retrieval

- Uses reward signals to rerank documents based on final answer quality

- Fine-tunes separate 3B, 7B, and 14B models to serve as simulators
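One way to picture a prompt-driven simulator is a function that asks a language model for either helpful or noisy documents, depending on a noise probability. This is a hedged sketch only: the prompt wording, `build_prompt`, `simulate_search`, and the `generate` callable (any function mapping a prompt string to generated text) are hypothetical names, and only the cap of 20 documents comes from the description above.

```python
import random
from typing import Callable, List, Optional

MAX_DOCS = 20  # the summary mentions up to 20 documents per query

# Assumed prompt wording, for illustration only.
PROMPT_TEMPLATE = (
    "You are a search engine. For the query below, write {n} short documents, "
    "one per line, that are {quality} for answering it.\n"
    "Query: {query}\n"
)


def build_prompt(query: str, n_docs: int, noisy: bool) -> str:
    """Fill the template, switching document quality with a single flag."""
    quality = "noisy or misleading" if noisy else "useful and relevant"
    return PROMPT_TEMPLATE.format(n=n_docs, quality=quality, query=query)


def simulate_search(query: str,
                    generate: Callable[[str], str],
                    n_docs: int = 5,
                    noise_prob: float = 0.0,
                    rng: Optional[random.Random] = None) -> List[str]:
    """Return simulated documents; with probability noise_prob they are noisy."""
    rng = rng or random.Random()
    n_docs = max(1, min(n_docs, MAX_DOCS))   # clamp to the stated cap
    noisy = rng.random() < noise_prob        # decide quality for this query
    raw = generate(build_prompt(query, n_docs, noisy))
    docs = [line.strip() for line in raw.splitlines() if line.strip()]
    return docs[:n_docs]
```

Passing a seeded `random.Random` makes the quality decisions reproducible, which helps when comparing training runs.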

Performance across benchmarks

ZeroSearch models outperform retrieval-based and search-free baselines on QA tasks.

- 14B model beats Search-R1 (Google Search + policy) on Natural Questions

- 7B model matches Search-R1 while remaining fully search-free

- 3B model outperforms prior non-search baselines

- Shows stronger generalization on TriviaQA and PopQA (out-of-domain benchmarks)

Learning with curriculum rollout

Training uses a curriculum that gradually increases retrieval noise.

- Early stages use high-quality, relevant documents to stabilize learning

- Later stages inject irrelevant or conflicting data to improve reasoning

- Policy adapts to ambiguity and incomplete information
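A curriculum like this can be expressed as a schedule for the probability of serving noisy documents at each training step. The sketch below assumes an exponential interpolation between a start and an end probability; the function name, the default values, and the choice of curve are illustrative assumptions rather than settings confirmed by this summary.

```python
def noise_probability(step: int,
                      total_steps: int,
                      p_start: float = 0.0,
                      p_end: float = 0.5,
                      base: float = 4.0) -> float:
    """Probability of generating noisy documents at a given training step.

    Grows slowly at first and faster later, so early training mostly sees
    clean documents. All defaults are placeholder values.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if base <= 1.0:
        raise ValueError("base must be greater than 1")
    frac = min(max(step / total_steps, 0.0), 1.0)    # training progress in [0, 1]
    growth = (base ** frac - 1.0) / (base - 1.0)     # 0 at the start, 1 at the end
    return p_start + growth * (p_end - p_start)


# Example: print the schedule at a few checkpoints of a 100-step run.
for s in (0, 25, 50, 75, 100):
    print(s, round(noise_probability(s, 100), 3))
```

A larger `base` keeps the noise level low for longer before ramping up; a linear schedule would be a simpler alternative with the same endpoints.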

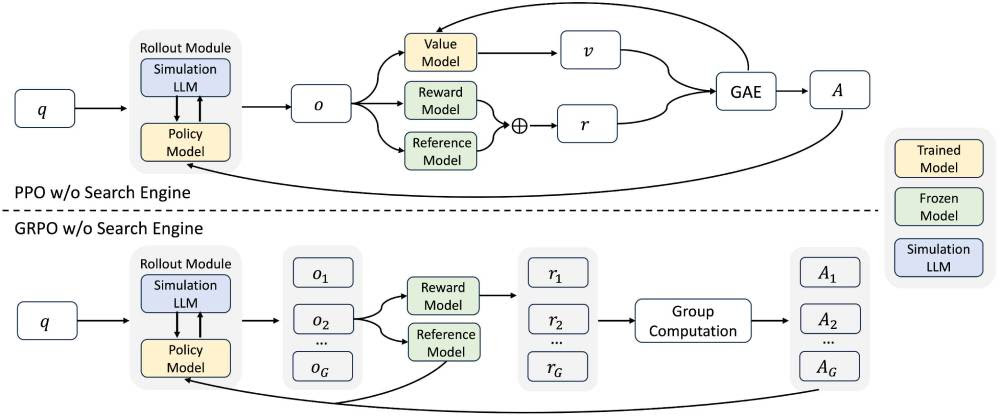

- Reinforcement learning (PPO) improves final answer accuracy using delayed rewards
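A delayed reward means the policy receives no signal for intermediate queries and is scored only once it has produced a final answer. The sketch below illustrates that idea under stated assumptions: it scores the answer with token-level F1 against a reference (one common QA metric, chosen here for illustration) and places the score on the last token of the rollout. The function names are hypothetical, and the PPO update itself is out of scope.

```python
import re
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation, and split into tokens."""
    return re.sub(r"[^a-z0-9 ]", " ", text.lower()).split()


def f1_reward(prediction: str, gold: str) -> float:
    """Token-level F1 between the predicted and the reference answer."""
    pred, ref = normalize(prediction), normalize(gold)
    if not pred or not ref:
        return 0.0
    overlap = sum((Counter(pred) & Counter(ref)).values())  # shared tokens
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def delayed_rewards(num_tokens: int, final_reward: float) -> List[float]:
    """Per-token rewards: zero everywhere except the final token."""
    if num_tokens <= 0:
        return []
    return [0.0] * (num_tokens - 1) + [final_reward]


# Example: a 6-token rollout whose final answer partially matches the reference.
rewards = delayed_rewards(6, f1_reward("the city of Paris", "Paris"))
```

An RL algorithm such as PPO then has to assign credit for that single end-of-episode score back to the earlier query and reasoning steps.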

Access and availability

- The GitHub repo includes training code, simulator prompts, and evaluation scripts

- Models trained with ZeroSearch are not yet publicly released